
Sales Pipeline Health Dashboard

Revenue growth and gross margin optimization are two of the most powerful levers for improving company profitability. Both require the ability to analyze performance across multiple dimensions—such as customer segments, deal size, discounting, and sales stages.

This dashboard analyzes the sales pipeline health of a fictitious Swiss company with a large sales organization. It provides a structured, drill-down view of pipeline volume, conversion rates, deal velocity, and risk concentration across regions and sales teams.

Designed for sales leadership and revenue operations, the analysis helps identify:

- Bottlenecks in the sales funnel
- Underperforming segments or territories
- Risks related to over-reliance on specific deals or customers
- Opportunities to improve forecast accuracy and revenue predictability

Due to the complexity of the data model and KPI logic, this type of dashboard is best suited for mature sales teams with well-defined CRM processes.

👉 Link to the live report

Predicting Sales Revenue Using Linear Regression

Accurate revenue forecasting is essential for budgeting, capacity planning, and strategic decision-making. This dashboard demonstrates how linear regression can be used to predict sales revenue based on key business drivers such as sales team size, advertising spend, and historical performance trends.

The analysis highlights the relationship between input variables and revenue outcomes, making it possible to quantify how changes in commercial investments impact expected sales results.
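As a rough illustration of how such a model quantifies the link between one driver and revenue, here is a minimal sketch of ordinary least squares with a single input. The figures, the `fit_line` and `predict` helpers, and the choice of advertising spend as the only driver are assumptions made for the example; they are not the data or model behind the dashboard.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope is the covariance of x and y divided by the variance of x.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: advertising spend and revenue, both in CHF thousands.
ad_spend = [10, 20, 30, 40, 50]
revenue = [95, 128, 172, 205, 250]

slope, intercept = fit_line(ad_spend, revenue)


def predict(spend):
    """Expected revenue for a given advertising spend under the fitted line."""
    return intercept + slope * spend


print(f"Revenue change per extra CHF 1k of ad spend: CHF {slope:.2f}k")
print(f"Predicted revenue at CHF 60k ad spend: CHF {predict(60):.1f}k")
```

The slope is what makes the model explainable: it states how much revenue is expected to change per unit of the driver. A model with several drivers (team size, spend, trend) follows the same idea with one coefficient per driver.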

Designed for management and finance stakeholders, the dashboard helps:

- Estimate future revenues under different business scenarios
- Identify the most influential drivers of sales performance
- Support data-driven decisions on hiring and marketing spend
- Improve planning accuracy with a transparent, explainable model

While linear regression is intentionally simple, it provides a strong baseline model that is easy to interpret and communicate, making it especially useful for organizations starting with predictive analytics.

👉 Link to the live report

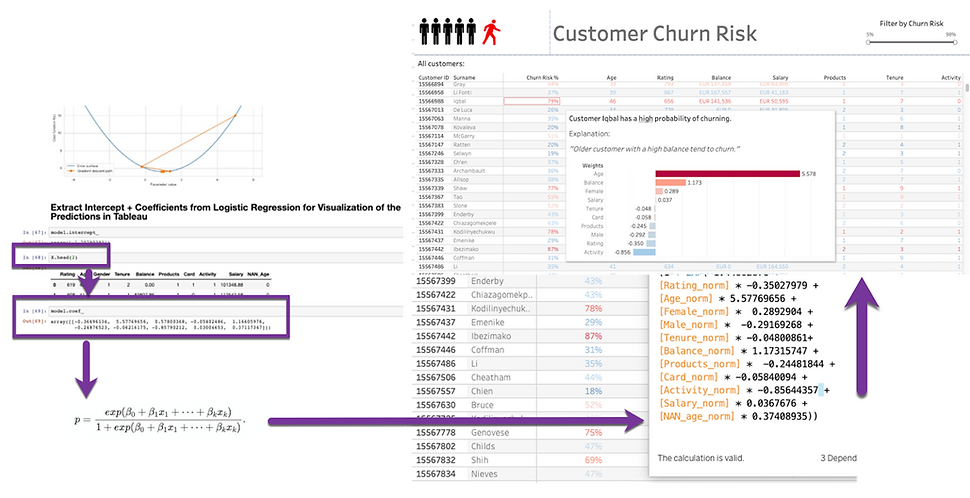

Customer Churn Prediction (Power BI / Tableau)

Understanding which customers are at risk of churning is critical for protecting recurring revenue and prioritizing retention efforts. This dashboard demonstrates how customer churn can be predicted using a lightweight machine learning approach, such as logistic regression, directly within Power BI or Tableau.

The model estimates the probability of churn based on behavioral and commercial indicators (e.g. usage patterns, tenure, contract characteristics, or support interactions). A strong emphasis is placed on model interpretability, ensuring that the underlying drivers of churn remain transparent and actionable.
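To show why a logistic regression can be scored without a separate model service, here is a minimal sketch that applies a set of coefficients with the logistic function. The coefficient values, feature names, and example customers are hypothetical and not taken from the dashboard; in a BI tool the same arithmetic would typically be written as a calculated field or measure.

```python
import math

# Hypothetical coefficients, as if exported from a model fitted offline.
INTERCEPT = -1.0
COEFFICIENTS = {
    "tenure_years": -0.5,      # longer tenure lowers the churn log-odds
    "support_tickets": 0.4,    # more tickets raise the churn log-odds
    "monthly_contract": 1.2,   # 1 = month-to-month contract, 0 = annual
}


def contributions(customer):
    """Per-feature contribution to the log-odds, useful for explaining a score."""
    return {name: COEFFICIENTS[name] * customer[name] for name in COEFFICIENTS}


def churn_probability(customer):
    """Logistic function applied to the intercept plus the weighted features."""
    z = INTERCEPT + sum(contributions(customer).values())
    return 1.0 / (1.0 + math.exp(-z))


# Two invented customers for illustration.
loyal = {"tenure_years": 6, "support_tickets": 1, "monthly_contract": 0}
at_risk = {"tenure_years": 1, "support_tickets": 5, "monthly_contract": 1}

for label, customer in [("loyal", loyal), ("at_risk", at_risk)]:
    print(label, round(churn_probability(customer), 3), contributions(customer))
```

The per-feature contributions are what keep the score interpretable: each one shows how far a single indicator pushes a customer toward or away from churn.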

Designed for customer success, sales, and management teams, the dashboard helps:

- Identify high-risk customers before they churn
- Understand the key factors driving churn behavior
- Prioritize retention actions based on churn probability and customer value
- Enable proactive, data-driven customer management

By using a mathematically deployable model, the solution avoids the need for a fully operational data science API. This makes churn prediction scalable, cost-efficient, and easy to integrate into existing BI environments such as Power BI or Tableau.

👉 Link to the live report

Combining the Flexibility of Excel with the Governance of Power BI

There are scenarios where companies need a full-blown reporting ecosystem like Power BI or Tableau (e.g., automated distribution, large-scale reporting). But there are many cases where Excel is still the best analytics layer—ad hoc analysis, fast exploration, and advanced modeling where Excel truly excels.

What if users could analyze data in Excel while Power BI remained the semantic layer—providing a single source of truth, centralized business logic, version control, governance, performance optimization, and incremental refresh?

This is possible by connecting Excel directly to a Power BI dataset.

This approach avoids two extremes:

Extreme 1: “Everything must be in Power BI”

Over-engineered dashboards, users forced into unfamiliar tools, and shadow Excel work anyway (because people will always export).

Extreme 2: “Everything stays in Excel”

Multiple versions of truth, manual refreshes, fragile formulas, and no governance.

Power BI for data modeling + Excel for analysis is pragmatic, scalable, and user-friendly.

By connecting Excel to a Power BI dataset, users get the best of both worlds: the flexibility of Excel with the governance of Power BI.

Root Cause Analytics

Root Cause Analysis (RCA) is a systematic approach for identifying the underlying factors that contribute to problems, failures, or undesirable outcomes. Rather than addressing surface-level symptoms, RCA aims to reduce recurrence by targeting the mechanisms or conditions that give rise to those outcomes.

A more advanced approach augments traditional RCA with techniques from Machine Learning. One such technique is feature selection, a class of statistical and algorithmic methods used to identify variables that are most informative with respect to an outcome of interest. Feature selection can be used to reduce dimensionality, limit noise, and prioritize variables that warrant closer investigation.

In practice, feature selection may include simple methods such as removing low-variance features, as well as more advanced approaches based on statistical dependence, regularization, or model-based importance measures. When used alongside RCA, these methods help narrow a large set of potential contributing factors to a smaller set of plausible candidates for root cause investigation.
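The two simplest steps mentioned above, dropping low-variance features and ranking the rest by statistical dependence, can be sketched as follows. The dataset, the feature names, and the `select_features` helper are invented for illustration, and absolute Pearson correlation stands in for the broader family of dependence measures.

```python
from statistics import mean, pvariance


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def select_features(features, outcome, min_variance=1e-8, top_k=2):
    """Drop near-constant features, then rank the rest by |correlation|."""
    # Step 1: a feature that never varies cannot explain changes in the outcome.
    kept = {name: vals for name, vals in features.items()
            if pvariance(vals) > min_variance}
    # Step 2: rank remaining features by strength of linear association.
    scored = [(name, pearson(vals, outcome)) for name, vals in kept.items()]
    scored.sort(key=lambda item: abs(item[1]), reverse=True)
    return scored[:top_k]


# Hypothetical production data: candidate factors and an observed defect rate.
features = {
    "machine_temp": [70, 72, 75, 80, 85, 90],
    "shift_id": [1, 2, 1, 2, 1, 2],
    "plant_code": [7, 7, 7, 7, 7, 7],      # constant, removed in step 1
    "humidity": [40, 42, 39, 41, 40, 43],
}
defect_rate = [1.0, 1.2, 1.5, 2.1, 2.6, 3.2]

for name, corr in select_features(features, defect_rate):
    print(f"{name}: correlation {corr:+.2f}")
```

The output is a shortlist of candidates for investigation, not a verdict: a high correlation marks a variable worth examining, nothing more.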

Advantages of Combining Machine Learning Feature Selection with Root Cause Analysis

- Enables analysis of large and complex datasets to surface variables associated with potential root causes
- Can reveal non-obvious patterns and relationships that may not emerge through qualitative analysis alone
- Supports interpretability: selected variables can be examined, tested, and validated through real-world investigation rather than treated as opaque model outputs

Pro tip: Be cautious when interpreting patterns. Feature selection identifies associations, not causation. Establishing true causal relationships requires domain knowledge, experimental validation, or causal inference methods. Correlation alone does not imply that one factor causes another.

What-If Forecasting (my book)

For most businesses, today’s environment is simply too volatile for traditional forecasting. Single-number forecasts create a false sense of certainty when leaders actually need to understand a range of possible futures. What-if forecasting makes that possible.

What-if forecasting lets you explore questions such as:

· What if revenue decreases by 5%? What’s our worst case?

· Which 80/20 revenue drivers should we double down on?

· How would a shock—like a COVID-style event—impact EBITDA?

By simulating thousands of scenarios, what-if forecasting quantifies uncertainty, highlights risk, and reveals the levers that truly move results. This clarity enables faster, more confident decisions—spotting risks earlier, testing interventions instantly, and reallocating resources.
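A minimal sketch of the simulation idea, assuming a deliberately tiny EBITDA model: revenue growth and the variable cost ratio are drawn at random, and the resulting distribution is summarized by percentiles. All figures, distributions, and function names are hypothetical and chosen only to show the mechanics.

```python
import random


def simulate_ebitda(n_scenarios=10_000, seed=42):
    """Draw random scenarios and return the sorted EBITDA outcomes (CHF millions)."""
    rng = random.Random(seed)
    base_revenue = 10.0   # hypothetical current revenue
    fixed_costs = 3.0     # hypothetical fixed cost base
    outcomes = []
    for _ in range(n_scenarios):
        growth = rng.gauss(0.02, 0.08)                 # uncertain revenue growth
        variable_cost_ratio = rng.uniform(0.50, 0.60)  # uncertain cost share
        revenue = base_revenue * (1 + growth)
        outcomes.append(revenue * (1 - variable_cost_ratio) - fixed_costs)
    outcomes.sort()
    return outcomes


def percentile(sorted_values, p):
    """Simple nearest-rank style percentile on an already sorted list."""
    index = min(len(sorted_values) - 1, int(p / 100 * len(sorted_values)))
    return sorted_values[index]


ebitda = simulate_ebitda()
p5, p50, p95 = (percentile(ebitda, p) for p in (5, 50, 95))
loss_probability = sum(value < 0 for value in ebitda) / len(ebitda)

print(f"EBITDA range (5th to 95th percentile): {p5:.2f} to {p95:.2f}")
print(f"Median EBITDA: {p50:.2f}")
print(f"Share of scenarios with negative EBITDA: {loss_probability:.1%}")
```

Reporting a range and a probability of loss, rather than one number, is the point of the exercise. A shock scenario can be tested by changing the growth distribution and rerunning.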

Together, these capabilities create a rapid decision loop:

🔍 Modeling → 🎯 Deciding → ⚡ Acting

This agile cycle allows organizations to forecast smarter, adapt faster, and grow stronger in an increasingly volatile world.

My book is available on Amazon (link)

Statistical Hypothesis Testing: Are We Promoting More Men Than Women?

Statistical hypothesis testing is a method used to determine whether there is sufficient evidence in sample data to draw conclusions about a larger population. The process typically involves testing a null hypothesis (H₀)—which represents no effect or no difference—against an alternative hypothesis (H₁), which represents a competing claim.

In this fictitious example, 203 men and 117 women were promoted. The question we want to answer is whether this observed difference provides statistical evidence that the company favors men over women in promotions.

Some difference in promotion counts is always expected due to random variation. Hypothesis testing helps us assess whether the observed difference is statistically significant, meaning it is unlikely to have occurred by chance alone under the assumption that the null hypothesis is true.

To evaluate this, we conduct a statistical test comparing promotion outcomes by gender. The test produces a p-value of 0.0225. Using a commonly accepted significance level (α) of 0.05, the p-value is smaller than the threshold. Therefore, we reject the null hypothesis of no difference in promotion rates in favor of the alternative hypothesis that promotion rates differ by gender.

This result indicates that the observed difference in promotions between men and women is statistically significant for this sample.

Important note: Statistical significance does not imply causation or intent. This result alone does not prove discrimination or bias. Other factors—such as role, tenure, performance, department, or applicant pool composition—may explain part or all of the observed difference. Hypothesis testing identifies whether a difference exists, not why it exists.

Statistical Hypothesis Testing: Part 2

In the example above, I used a permutation hypothesis test to evaluate whether the company may be favoring men in promotions. While formal hypothesis testing is still relatively uncommon in business intelligence and descriptive analytics, it can be extremely valuable when decisions require statistical justification rather than intuition alone.

Permutation-based hypothesis testing offers an intuitive alternative to classical closed-form tests (such as the t-test). Instead of relying on distributional assumptions, permutation tests simulate the null hypothesis directly from the data. This approach often provides a better conceptual understanding of uncertainty and variability, especially for practitioners working with real-world datasets.

Many graduate and Ph.D. programs now require students to apply hypothesis testing rigorously. While these methods can be challenging, they play an important role in teaching disciplined reasoning about evidence, randomness, and inference.

In practice, modern data analysis frequently implements hypothesis testing using permutation tests, particularly in programmatic environments such as Python or R (for example, in RStudio).

A permutation test is a non-parametric hypothesis test that evaluates whether an observed effect—such as a difference between groups—is likely to have occurred by chance. It does this by repeatedly shuffling (permuting) group labels to simulate the null hypothesis of no effect, generating a reference distribution of the test statistic. The p-value is then computed as the proportion of permuted outcomes that are at least as extreme as the observed result.
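The procedure described above can be sketched in a few lines of Python. The text gives only the number of promotions (203 men, 117 women); the eligible headcounts used below (400 men, 270 women) are invented for illustration, so the resulting p-value is not expected to match the 0.0225 reported earlier. The test shown is one-sided, asking whether the promotion rate for men exceeds that for women by more than chance would produce.

```python
import random


def permutation_test(group_a, group_b, n_permutations=2000, seed=0):
    """One-sided permutation test on the difference in means (a minus b)."""
    rng = random.Random(seed)
    n_a, n_b = len(group_a), len(group_b)
    observed = sum(group_a) / n_a - sum(group_b) / n_b
    pooled = list(group_a) + list(group_b)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Shuffling the pooled outcomes simulates "group labels do not matter".
        rng.shuffle(pooled)
        diff = sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b
        if diff >= observed:
            at_least_as_extreme += 1
    # p-value: share of shuffled differences at least as large as the observed one.
    return observed, at_least_as_extreme / n_permutations


# 1 = promoted, 0 = not promoted. Headcounts are hypothetical.
men = [1] * 203 + [0] * 197      # 400 eligible men (assumed)
women = [1] * 117 + [0] * 153    # 270 eligible women (assumed)

observed, p_value = permutation_test(men, women)
print(f"Observed difference in promotion rate: {observed:.3f}")
print(f"One-sided permutation p-value: {p_value:.4f}")
```

More permutations give a more stable p-value at the cost of run time; 2,000 is kept small here so the example runs quickly.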

Sales Pipeline Analysis

To increase a company’s profitability, a significant share of data analytics efforts should focus on sales revenue, followed by gross margin and operating margin (EBIT / revenue). In practice, a 1% increase in sales almost always has a greater impact on profitability than a 1% reduction in costs.

The dashboard shown below presents a sales pipeline analysis for a fictitious Swiss company. The pipeline is segmented into three time horizons:

- Sales pipeline: 0–30 days
- Sales pipeline: 1–6 months
- Sales pipeline: >6 months

The primary objective of this analysis is to generate actionable insights. For example, CHF 2.1 million—nearly 60% of the total pipeline—is overdue. This insight alone can represent significant added value, as it immediately highlights where management attention is required.

However, collecting and structuring the data needed to produce such an analysis within a CRM system (e.g., Pipedrive or Salesforce) requires considerable effort. As a result, this type of analysis is most suitable for medium to large sales organizations, where the potential benefits clearly outweigh the setup and maintenance costs.

Outlier Detection Analysis

Detecting outliers in data analytics is often useful for identifying exceptionally good or bad observations across areas such as sales, regions, products, accounting, or payments. Outliers are frequently highlighted visually—for example, by displaying regular data points in gray and outliers in red—to make deviations immediately apparent.

The sample dashboard below illustrates an outlier detection approach based on standard deviation.

In practice, two popular methods are commonly used for outlier detection:

- Standard deviation

  This approach identifies outliers based on their distance from the mean, for example by flagging values more than two standard deviations away. For normally distributed data, roughly 95% of observations fall within that range. It is widely used when the data is approximately normally distributed.

- Interquartile Range (IQR)

  The IQR measures the spread of the middle 50% of a dataset and is calculated as the difference between the third quartile (Q3, 75th percentile) and the first quartile (Q1, 25th percentile). This method is robust to skewed data and does not assume a normal distribution, although the resulting visualizations can be more difficult to interpret.
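Both methods can be sketched with Python's standard library. The sales figures are invented, and the 1.5 × IQR fences used in the second function are the conventional rule of thumb rather than something specified by the dashboard.

```python
from statistics import mean, pstdev, quantiles


def std_outliers(values, k=2.0):
    """Values more than k standard deviations away from the mean."""
    center, spread = mean(values), pstdev(values)
    return [v for v in values if abs(v - center) > k * spread]


def iqr_outliers(values, k=1.5):
    """Values outside [Q1 - k * IQR, Q3 + k * IQR]."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical weekly sales figures with one unusually strong week.
sales = [102, 98, 105, 97, 101, 99, 103, 100, 96, 180]

print("Standard deviation method:", std_outliers(sales))
print("IQR method:", iqr_outliers(sales))
```

On this small sample both methods flag the same value. They tend to diverge on skewed data, where a few extreme values inflate the mean and standard deviation but leave the quartiles largely unchanged.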

Employee Contribution Indicators

The dashboard below illustrates an approach to people analytics in which employees are positioned across two dimensions: a skill score (x-axis) and salary (y-axis, reversed). The target quadrant highlights individuals who combine strong skill profiles with comparatively lower compensation.

The skill score is derived from a curated mix of soft and hard skills, aggregated into a single composite indicator.

The visualization is built in Tableau, which is particularly strong at representing sets and groupings. Set theory—a branch of discrete mathematics—studies collections of objects and provides a useful conceptual foundation for this type of analysis, where individuals can belong to multiple overlapping skill sets.

This dashboard demonstrates how multi-variable data can be combined to analyze performance signals and support exploratory insights. It is not intended to assess personal worth or replace human judgment, but rather to inform discussion and guide further analysis.

Link to the live dashboard.

Battery Capacity Analysis (Weibull Survival)

For warranty and reliability purposes, it is often important to understand how long a product is expected to last. This applies across many industries and products—such as coffee machines, IoT devices, household appliances, or, in this example, electric vehicle batteries.

Battery degradation is a key reliability challenge for electric vehicles. Temperature is known to significantly influence battery aging, yet its impact is often difficult to quantify in a simple and interpretable way. This example demonstrates how Weibull survival analysis can be used to model battery reliability under different climate conditions.

Scenario Definition (Synthetic Dataset)

Battery survival probabilities are evaluated at 100,000 km of usage under three climate scenarios:

- Warm climate (≈ Latitude 30°N)
- Mild climate (≈ Latitude 45°N)
- Cold climate (≈ Latitude 60°N)

Climate is approximated using latitude as a proxy for long-term temperature exposure, allowing the effect of environmental conditions on battery reliability to be illustrated in a simple and intuitive way.
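For reference, the Weibull survival function is S(t) = exp(-(t / scale)^shape), where the scale parameter is the characteristic life and the shape parameter controls how the failure rate changes with usage. The sketch below evaluates it at 100,000 km for the three scenarios. The shape and scale values are invented for illustration and are not fitted to the synthetic dataset or to any real battery data.

```python
import math


def weibull_survival(t, shape, scale):
    """Probability that a unit survives beyond t under a Weibull model."""
    return math.exp(-((t / scale) ** shape))


# Hypothetical parameters: one shared shape, one scale (in km) per climate.
SHAPE = 2.0  # shape > 1 means the failure rate increases with usage
SCALE_KM = {
    "warm": 250_000,
    "mild": 320_000,
    "cold": 280_000,
}

survival_at_100k = {
    climate: weibull_survival(100_000, SHAPE, scale)
    for climate, scale in SCALE_KM.items()
}

for climate, probability in survival_at_100k.items():
    print(f"{climate}: {probability:.3f} survival probability at 100,000 km")
```

In a real analysis the shape and scale would be estimated from observed failure and censoring data for each climate group, typically with a survival analysis library.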

Note: This analysis is illustrative and based on synthetic data. It is intended to demonstrate modeling techniques and analytical reasoning rather than predict real-world battery performance.

Optimizing to Minimize Costs or Maximize Revenue

Linear Programming (LP) is an optimization technique widely used in business to achieve the best possible outcome—such as minimizing costs or maximizing profit—by modeling linear relationships subject to a set of constraints. Common applications include production planning, resource allocation, logistics optimization, and demand-based pricing.

The fictitious example below demonstrates how total shipping costs can be minimized using Excel’s Solver.
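To make the structure of such a problem concrete, here is a small transportation example in Python: two warehouses, three customers, a cost per unit on each route, and supply and demand constraints. All names and numbers are hypothetical. Because the example is tiny and balanced (total supply equals total demand), it is solved by exhaustive search over a 5-unit grid. That is a stand-in for a real LP solver such as Excel's Solver, not an LP algorithm.

```python
from itertools import product

SUPPLY = {"Zurich": 40, "Geneva": 60}              # hypothetical units available
DEMAND = {"Basel": 30, "Bern": 35, "Lugano": 35}   # hypothetical units required
COST = {                                           # hypothetical CHF per unit
    ("Zurich", "Basel"): 4, ("Zurich", "Bern"): 6, ("Zurich", "Lugano"): 9,
    ("Geneva", "Basel"): 8, ("Geneva", "Bern"): 5, ("Geneva", "Lugano"): 10,
}


def cheapest_plan(step=5):
    """Try every Zurich allocation on a grid; Geneva ships whatever remains."""
    customers = list(DEMAND)
    grids = [range(0, DEMAND[c] + 1, step) for c in customers]
    best_cost, best_plan = None, None
    for zurich_units in product(*grids):
        # Balanced problem: Zurich must ship exactly its supply,
        # which leaves Geneva shipping exactly its own supply.
        if sum(zurich_units) != SUPPLY["Zurich"]:
            continue
        plan = {}
        for customer, units in zip(customers, zurich_units):
            plan[("Zurich", customer)] = units
            plan[("Geneva", customer)] = DEMAND[customer] - units
        cost = sum(COST[route] * units for route, units in plan.items())
        if best_cost is None or cost < best_cost:
            best_cost, best_plan = cost, plan
    return best_cost, best_plan


best_cost, best_plan = cheapest_plan()
print(f"Minimum total shipping cost: CHF {best_cost}")
for route, units in best_plan.items():
    if units:
        print(f"  {route[0]} -> {route[1]}: {units} units")
```

The three ingredients are the same as in a Solver model: decision variables (units per route), an objective (total cost), and constraints (supply and demand). Exhaustive search does not scale beyond toy problems, which is where a dedicated solver becomes necessary.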

While optimization problems can be mathematically complex and are often the domain of engineers or operations researchers, data analysts can play a valuable supporting role. Using tools such as Excel, Power BI, or Python, analysts can help structure problems, explore scenarios, and deliver practical solutions that reduce costs or increase revenues.